PRESENTATION

View our full project presentation and supporting materials.

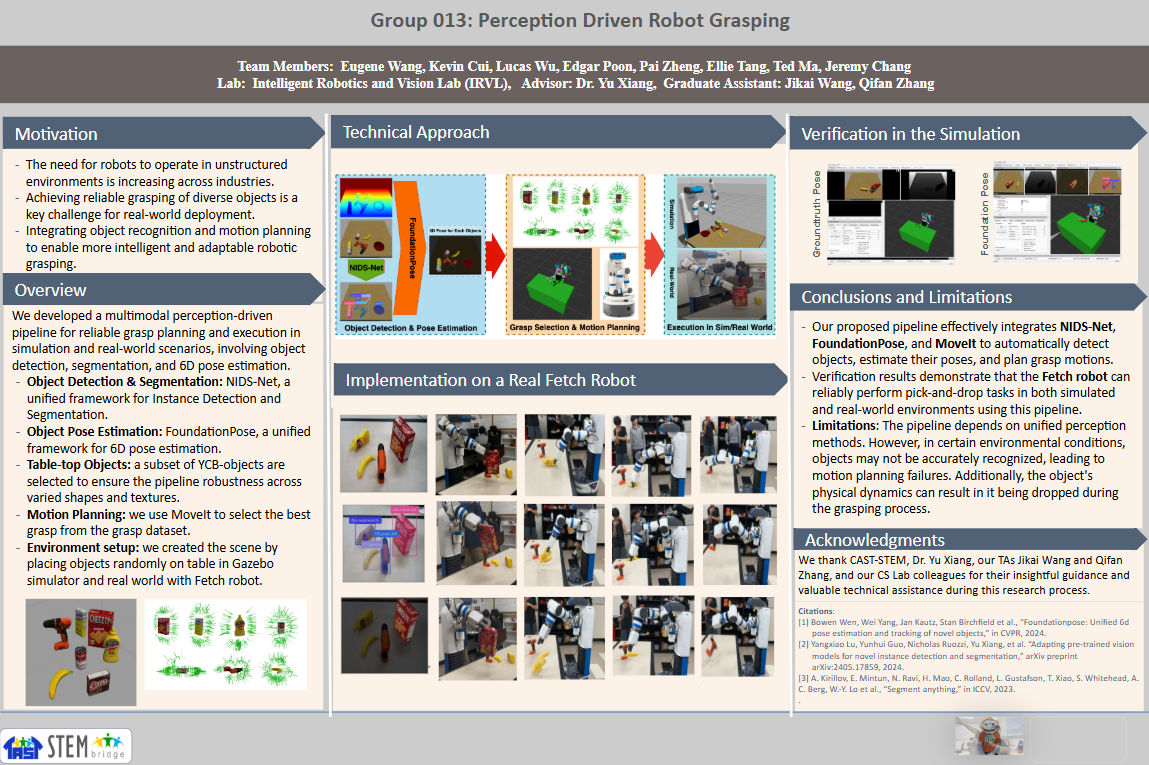

POSTER

Dive into the qualitative and quantitative analysis conducted throughout the project.

Perception Driven Robot Grasping | Simulation Data | Testing Results

PROJECT SYNOPSIS

Our project focused on estimating hand and object poses from data collected by a multi-camera system. We configured and tested an end-to-end pipeline that simultaneously tracks hand and object poses in recorded sequences, a capability commonly used in AR/VR (XR), robotics, computer vision, and security applications.

CORE SKILLS LEARNED

Before diving into the main system, we built a strong technical foundation. We learned Python, PyTorch for machine learning, Docker for containerized environments, and Ubuntu for a Linux-based workflow. We also studied essential linear algebra concepts like coordinate transformations and matrix operations, which are crucial for pose estimation and 3D geometry.

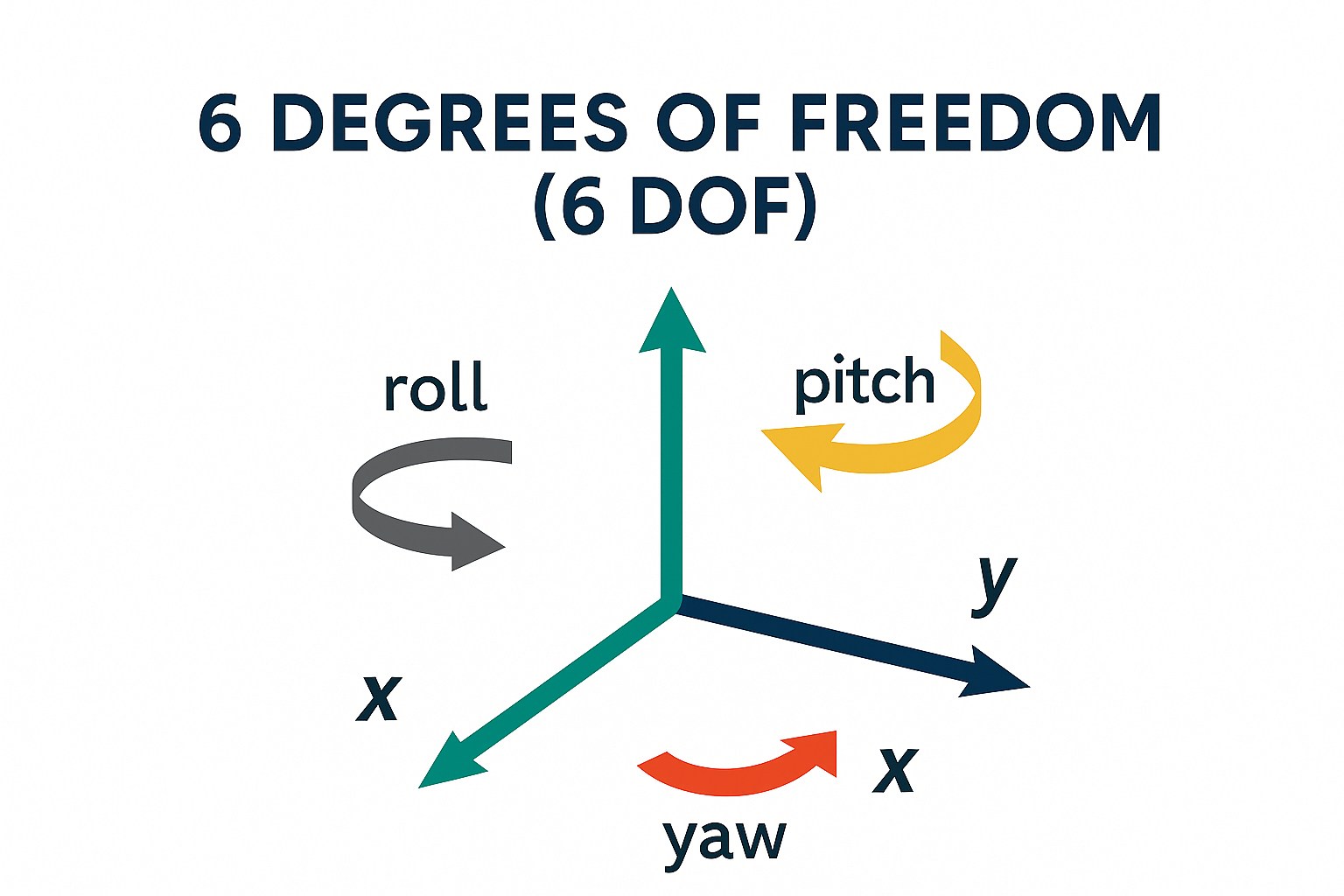

6 Degrees of Freedom (6 DoF)

To accurately determine the position and orientation of any object in 3D space, we studied the six degrees of freedom: translation along the x, y, and z axes (forward/backward, left/right, up/down), and rotation around those same axes (roll, pitch, and yaw). Mastering these concepts allowed us to understand how robots interpret object locations and orientations for precise manipulation.
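As a minimal sketch of how a 6 DoF pose can be represented in code, the example below packs three translations and three rotations into a 4x4 homogeneous transform and applies it to a point. The function names and the rotation order (yaw, then pitch, then roll, composed as `Rz @ Ry @ Rx`) are assumptions made for illustration; other conventions exist, and this is not necessarily the one used in our pipeline.

```python
import numpy as np

def pose_to_matrix(x, y, z, roll, pitch, yaw):
    """Build a 4x4 homogeneous transform from a 6 DoF pose.

    Angles are in radians. The rotation order is an assumption:
    R = Rz(yaw) @ Ry(pitch) @ Rx(roll).
    """
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)

    Rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    Ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    Rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])

    T = np.eye(4)
    T[:3, :3] = Rz @ Ry @ Rx   # orientation (3 rotational DoF)
    T[:3, 3] = [x, y, z]       # position (3 translational DoF)
    return T

def transform_point(T, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    p = np.append(np.asarray(point, dtype=float), 1.0)
    return (T @ p)[:3]

# Example: an object 0.5 m ahead along x, rotated 90 degrees about z (yaw).
T = pose_to_matrix(0.5, 0.0, 0.0, 0.0, 0.0, np.pi / 2)

# A point 0.1 m along the object's own x axis, expressed in the parent frame.
corner = transform_point(T, [0.1, 0.0, 0.0])
```

After the 90 degree yaw, the object's x axis points along the parent frame's y axis, so the point lands 0.1 m to the side of the object's origin rather than in front of it.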

Robot Operating System (ROS)

We integrated ROS (Robot Operating System) to bridge our perception pipeline with the physical robot. ROS manages communication between various hardware and software components using a modular system of nodes, topics, messages, and services. For example, one node might process camera images, while another controls the robot gripper, all coordinated through ROS’s communication channels.
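The publish/subscribe idea behind nodes and topics can be illustrated without a ROS installation. The sketch below is a toy, in-process message bus written in plain Python; it is not the ROS API, and the class `ToyBus`, the topic names, and the message contents are all hypothetical. It only shows how one node can react to camera data and hand a result to another node through named topics.

```python
from collections import defaultdict

class ToyBus:
    """In-process stand-in for topic-based messaging (NOT the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        # Register a callback to run whenever a message arrives on `topic`.
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback subscribed to `topic`.
        for callback in self._subscribers[topic]:
            callback(message)

bus = ToyBus()
gripper_log = []

def perception_node(image):
    # Pretend to detect an object in the image, then publish its position.
    detection = {"object": "mug", "position": (0.5, 0.1, 0.8)}
    bus.publish("/object_pose", detection)

def gripper_node(detection):
    # Record the action the gripper would take for this detection.
    gripper_log.append(f"grasp {detection['object']} at {detection['position']}")

# Hypothetical topic names, chosen only for this example.
bus.subscribe("/camera/image", perception_node)
bus.subscribe("/object_pose", gripper_node)

# A single camera frame triggers the whole chain.
bus.publish("/camera/image", {"width": 640, "height": 480})
```

Neither node calls the other directly: each one only knows the topic it listens to and the topic it publishes on, which is what makes the design modular.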

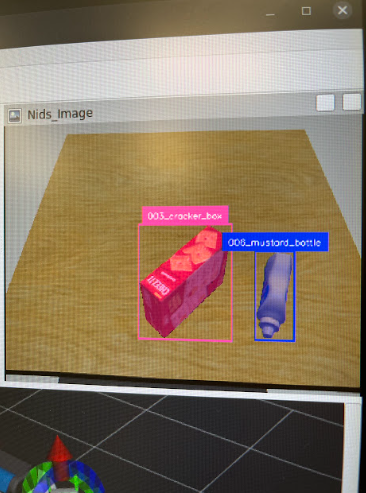

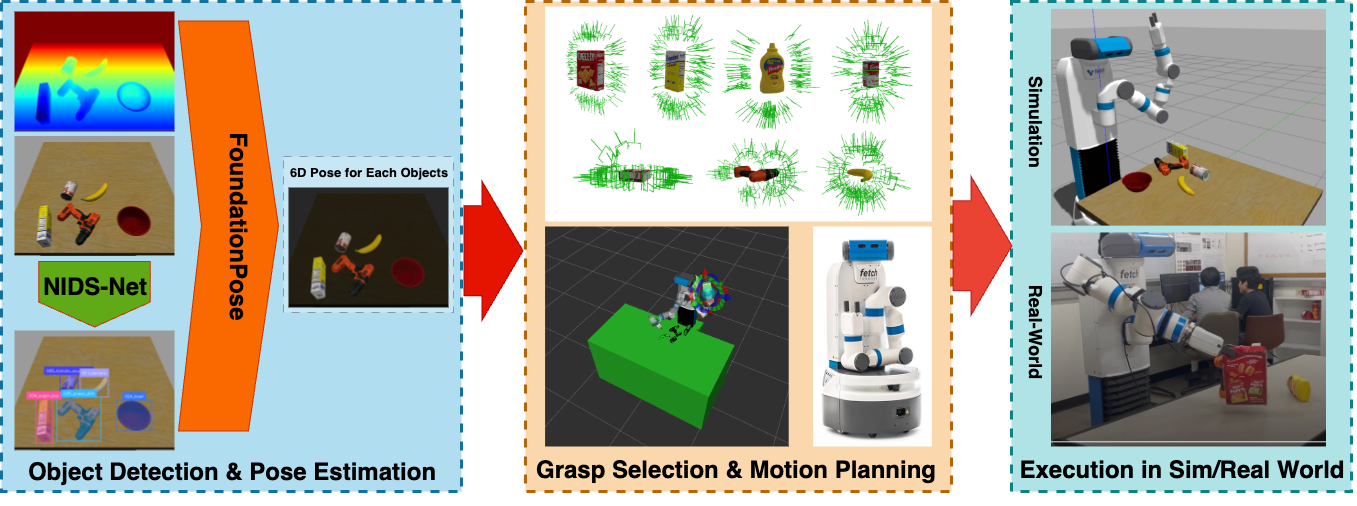

Perception Pipeline: NIDS-Net + FoundationPose

Our pipeline begins with NIDS-Net, a neural network used to segment objects in RGB-D images. These segmented outputs are passed into FoundationPose, a model that estimates the full 6D pose of objects. Together, they provide the spatial awareness needed for the robot to plan grasping actions based on visual input.
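To make the hand-off between segmentation and pose estimation more concrete, the sketch below shows one generic way a segmentation mask and a depth image can be combined: masked depth pixels are back-projected into 3D camera-frame points with a pinhole camera model. This is not the NIDS-Net or FoundationPose API; the function name, the toy image, and the intrinsics (`fx`, `fy`, `cx`, `cy`) are assumptions for illustration, and the centroid is only a rough position cue, not a 6D pose.

```python
import numpy as np

def masked_depth_to_points(depth, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels into camera-frame 3D points.

    depth: (H, W) array of depths in meters.
    mask:  (H, W) boolean array, True where the object was segmented.
    fx, fy, cx, cy: pinhole intrinsics in pixels (assumed known).
    """
    valid = mask & (depth > 0)      # ignore pixels with missing depth
    v, u = np.nonzero(valid)        # row (v) and column (u) indices
    z = depth[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)

# Toy 4x4 depth image: background at 2.0 m, object in the centre at 1.0 m.
depth = np.full((4, 4), 2.0)
depth[1:3, 1:3] = 1.0

# Toy segmentation mask covering the same 2x2 centre region.
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True

points = masked_depth_to_points(depth, mask, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
centroid = points.mean(axis=0)      # rough object position in the camera frame
```

In this toy case the object is centred on the optical axis, so the centroid sits 1.0 m straight ahead of the camera.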

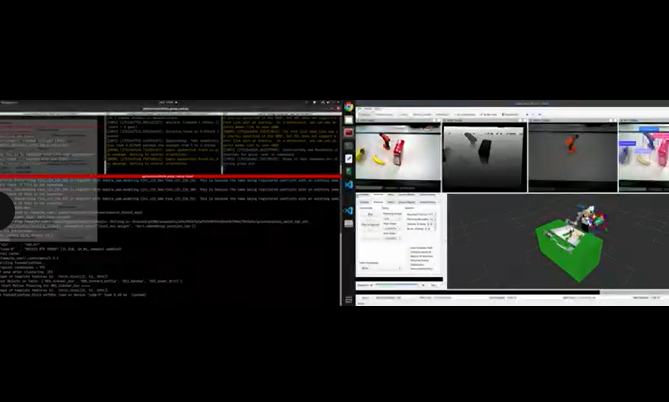

Simulating with Gazebo

Before deploying on the physical robot, we tested the system in Gazebo, a 3D robotics simulator commonly used with ROS. It allowed us to validate the full grasping pipeline in a safe, virtual environment. This helped us fine-tune object segmentation and pose estimation outputs before performing real-world grasps on the Fetch robot.

Grasp Execution on the Fetch Robot

We deployed our pipeline on a real Fetch robot. The robot received RGB-D data, segmented the scene, estimated object poses, and calculated grasp positions. Grasping was successful in most cases. However, challenges arose with cluttered scenes and curved or reflective objects, where pose estimation accuracy could drop due to sensor or model limitations.
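The step from an estimated object pose to a grasp position can be sketched as a chain of coordinate transforms. The example below assumes the camera's pose in the robot base frame is known, converts a camera-frame object pose into the base frame, and places a pre-grasp point a fixed distance above the object. The function names, the camera placement, the 0.10 m standoff, and the top-down approach are all illustrative assumptions, not the grasp planner used on the Fetch robot.

```python
import numpy as np

def make_transform(rotation, translation):
    """Assemble a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def pregrasp_from_object_pose(T_base_cam, T_cam_obj, standoff=0.10):
    """Return the object pose and a pre-grasp position in the base frame.

    The pre-grasp point is placed `standoff` meters above the object along
    the base frame's z axis (a top-down grasp is assumed).
    """
    T_base_obj = T_base_cam @ T_cam_obj
    pregrasp = T_base_obj[:3, 3] + np.array([0.0, 0.0, standoff])
    return T_base_obj, pregrasp

# Hypothetical camera pose: 1.0 m above the base and looking straight down,
# i.e. rotated 180 degrees about x so the camera z axis points along base -z.
R_base_cam = np.array([[1.0, 0.0, 0.0],
                       [0.0, -1.0, 0.0],
                       [0.0, 0.0, -1.0]])
T_base_cam = make_transform(R_base_cam, [0.5, 0.0, 1.0])

# Hypothetical pose estimate: object 0.7 m in front of the camera.
T_cam_obj = make_transform(np.eye(3), [0.1, 0.0, 0.7])

T_base_obj, pregrasp = pregrasp_from_object_pose(T_base_cam, T_cam_obj)
```

Because every downstream position is derived from the estimated object pose, any error in that estimate, for example on reflective objects, carries straight through to the grasp target.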

OVERVIEW

Hand-Object Interaction

Developing models to understand and predict how hands manipulate objects in 3D space

3D Pose Estimation

Creating algorithms to estimate the 3D position and orientation of objects from images

Robotic Manipulation

Translating computer vision research into robotic control systems

PROCESS

- Core Skills Learned: PyTorch, Docker, Ubuntu, Python libraries, and basic linear algebra.

- 6 DoF Understanding: Learned how 3D position and rotation define an object’s pose for robotic grasping.

- ROS Setup: Connected the Fetch robot’s hardware components via the Robot Operating System for synchronized control.

- Team Division: Hand tracking used MediaPipe and HaMeR; object tracking used NIDS-Net and FoundationPose.

- Pipeline Integration: Combined segmentation, 6D pose estimation, and robot grasp execution into one system.

- Simulation Testing: Validated the grasping system using Gazebo before moving to physical deployment.

- Real Robot Execution: Deployed the pipeline on the Fetch robot to grasp real objects.

- Observed Limitations: System lacked reactive feedback and struggled with curved objects or cluttered scenes.

PROJECT VIDEO

Group 13 Presentation Video by Ellie Tang

RESOURCES

- GitHub - Source code repository

Research Papers:

- Bowen Wen, Wei Yang, Jan Kautz, and Stan Birchfield, “FoundationPose: Unified 6D Pose Estimation and Tracking of Novel Objects,” in CVPR, 2024.

- Yangxiao Lu, Yunhui Guo, Nicholas Ruozzi, Yu Xiang, et al., “Adapting Pre-Trained Vision Models for Novel Instance Detection and Segmentation,” arXiv preprint arXiv:2405.17859, 2024.